⏱️ In one minute: In GCP-VOs contribution-funded projects, “reporting” can include more than a narrative. If your Workplan promises an output (manual, toolkit, curriculum, research-style report), you need to plan the time, budget, and evidence trail to deliver that asset in a usable, review-ready format.

🗓️ Still applying? Start here first:

➡️ GCP-VOs Call: What to Submit (and How to Avoid Reporting Traps)

🔍 What the “finish line” really is

Teams often treat close-out as “write the final report.” But the finish line is usually deliverables + documentation + evidence, aligned to what you committed to produce.

That’s why you can run a great program and still feel blindsided at the end.

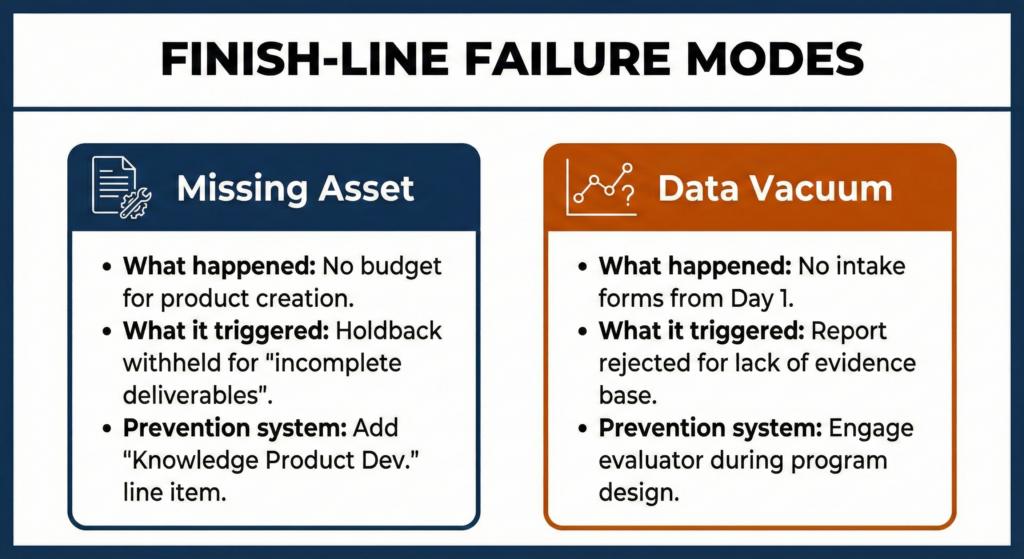

🧩 Case Study 1: The “Missing Asset” close-out scramble (anonymized, true pattern)

🎯 The project: A rural coalition delivered a peer-support reintegration initiative. Their Workplan committed to both direct services and an Implementation Manual intended to help other communities replicate the model.

✅ What went right: Services were delivered well. Participants benefited. Partnerships held.

❌ What went wrong: The manual was treated as a “later” task. At close-out, they submitted a short lessons-learned summary rather than the promised implementation manual.

🧨 What it triggered: The file was treated as incomplete until the promised asset existed in a review-ready form. Leaders scrambled to produce it after delivery ended, when staff were tired and details were harder to capture.

🛠️ Prevention system (copy/paste into your Workplan):

✅ Add a task: “Manual drafting + validation”

✅ Set two milestones: mid-project outline + late-project draft

✅ Schedule two structured staff interviews while delivery is active

✅ Budget for writing, layout, and accessibility formatting

📊 Case Study 2: The “Data Vacuum” (you can’t reverse-engineer evidence)

🎯 The project: A community agency supported women facing barriers to employment. Their application promised a GBA+ barriers report to inform future policy and practice.

✅ What went right: The team delivered real client support and collected compelling success stories.

❌ What went wrong: They didn’t set up disaggregated data intake from Day 1 (not even basic fields such as age range, barrier category, and caregiver status). At close-out, they had anecdotes but no evidence base.

🧨 What it triggered: The “report” couldn’t meet its intended purpose. This commonly leads to extra follow-up questions, delayed acceptance of close-out deliverables, and a file perceived as higher administrative risk in future.

🛠️ Prevention system (minimum viable evaluation):

✅ Build intake questions before service starts (with informed consent)

✅ Collect only what you need; store it securely

✅ Run a monthly data check (10 minutes) to catch gaps early

✅ If you promise a research-style output, budget for evaluation support
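To make the 10-minute monthly check concrete, here is a minimal Python sketch. It assumes intake records can be exported as simple rows, for example from a spreadsheet. The field names (`client_id`, `age_range`, `barrier_category`, `caregiver_status`, `consent_given`) are hypothetical placeholders, so swap in whatever your own data plan requires. The script counts blank fields and lists the records that need follow-up, which means gaps surface while clients are still in service.

```python
"""Minimal monthly intake data check (field names are hypothetical)."""

from dataclasses import dataclass

# Hypothetical disaggregated intake fields; replace with the fields
# your GBA+ reporting plan actually requires.
REQUIRED_FIELDS = ("age_range", "barrier_category", "caregiver_status", "consent_given")


@dataclass
class GapReport:
    total_records: int
    missing_by_field: dict[str, int]
    incomplete_ids: list[str]


def check_intake(records: list[dict]) -> GapReport:
    """Count missing or blank required fields across all intake records."""
    missing_by_field = {name: 0 for name in REQUIRED_FIELDS}
    incomplete_ids = []
    for record in records:
        # A field counts as a gap if it is absent, None, or an empty string.
        gaps = [name for name in REQUIRED_FIELDS if record.get(name) in (None, "")]
        for name in gaps:
            missing_by_field[name] += 1
        if gaps:
            incomplete_ids.append(record.get("client_id", "<no id>"))
    return GapReport(len(records), missing_by_field, incomplete_ids)


if __name__ == "__main__":
    # Illustrative sample rows, not real client data.
    sample = [
        {"client_id": "C001", "age_range": "25-34", "barrier_category": "childcare",
         "caregiver_status": "yes", "consent_given": True},
        {"client_id": "C002", "age_range": "", "barrier_category": "transportation",
         "caregiver_status": None, "consent_given": True},
    ]
    report = check_intake(sample)
    print(report.missing_by_field)
    print("Follow up with:", report.incomplete_ids)
```

The check deliberately reports counts and record IDs only, so it can run monthly without copying personal details into a second place.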

🛠️ The fix: a simple “Deliverables + Evidence Map”

Before delivery begins, build one page that includes:

📌 Deliverables list (services + products)

✅ Acceptance criteria (what “done” looks like)

📊 Data plan (what you track, cadence, owner, storage)

🗂️ Evidence log (where proof lives for claims + reporting)

This one page prevents most finish-line chaos.
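If your team keeps the map in a spreadsheet or a small script, the same one-pager can be sketched as structured data. This is an illustrative Python example, not a required format. The `Deliverable` fields mirror the four elements above, and every name and value in it (owners, folders, criteria) is a hypothetical placeholder. `find_gaps` flags any deliverable whose acceptance criteria, data plan, or evidence location is still blank.

```python
"""Sketch of a Deliverables + Evidence Map as structured data (illustrative only)."""

from dataclasses import dataclass, field


@dataclass
class Deliverable:
    name: str
    kind: str                      # "service" or "product"
    acceptance_criteria: str = ""  # what "done" looks like
    data_tracked: list[str] = field(default_factory=list)
    cadence: str = ""              # e.g. "monthly"
    owner: str = ""
    storage: str = ""
    evidence_location: str = ""    # where proof lives for claims + reporting


def find_gaps(deliverables: list[Deliverable]) -> dict[str, list[str]]:
    """Return, for each deliverable, the map sections that are still blank."""
    gaps = {}
    for d in deliverables:
        missing = []
        if not d.acceptance_criteria:
            missing.append("acceptance criteria")
        # The data plan needs all four parts: what, how often, who, where.
        if not (d.data_tracked and d.cadence and d.owner and d.storage):
            missing.append("data plan")
        if not d.evidence_location:
            missing.append("evidence log")
        if missing:
            gaps[d.name] = missing
    return gaps


if __name__ == "__main__":
    # Hypothetical entries for illustration.
    evidence_map = [
        Deliverable(
            name="Peer-support sessions",
            kind="service",
            acceptance_criteria="Sessions delivered as set out in the Workplan",
            data_tracked=["attendance"],
            cadence="monthly",
            owner="Program coordinator",
            storage="Secure shared drive",
            evidence_location="Attendance logs folder",
        ),
        Deliverable(name="Implementation Manual", kind="product"),
    ]
    for name, missing in find_gaps(evidence_map).items():
        print(f"{name}: missing {', '.join(missing)}")
```

Run it at kickoff and again at each milestone. A product such as a manual that shows up with every section missing is exactly the "later" task behind the scramble in Case Study 1.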

🧭 How we help (post-award)

If you’re funded and want to avoid an end-of-project scramble, we support:

🗓️ a reporting calendar,

🗂️ a claims-ready evidence trail,

📊 KPI tracking and narrative reporting that match your agreement.